Data details

The distance between Neptune and the moon

Detailed data title: The average distance between Neptune and the moon as measured on the first day of each month

Source: Calculated using Astropy

Additional Info: I wrote a Python script using Astropy to calculate the distance between the named planets on the first day of each month for every year.

See what else correlates with The distance between Neptune and the moon

Popularity of the first name Joel

Detailed data title: Babies of all sexes born in the US named Joel

Source: US Social Security Administration

See what else correlates with Popularity of the first name Joel

Correlation is a measure of how much the variables move together. If it is 0.99, when one goes up the other goes up. If it is 0.02, the connection is very weak or non-existent. If it is -0.99, then when one goes up the other goes down. If it is 1.00, you probably messed up your correlation function.
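To make those ranges concrete, here is a minimal sketch of the Pearson correlation coefficient on hypothetical toy data. The `pearson_r` helper and the toy series are illustrative assumptions, not values from this page's database, and the sketch uses only the Python standard library.

```python
import math

def pearson_r(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Sum of products of deviations, and the two sums of squared deviations
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)

# Hypothetical toy data (not from this page)
years = [1, 2, 3, 4, 5, 6]
rising = [2 * y + 1 for y in years]    # moves exactly with years
falling = [10 - 3 * y for y in years]  # moves exactly against years
unrelated = [1, -1, 0, 0, -1, 1]       # no linear trend across years

r_rising = pearson_r(years, rising)
r_falling = pearson_r(years, falling)
r_unrelated = pearson_r(years, unrelated)

print("rising:", r_rising)
print("falling:", r_falling)
print("unrelated:", r_unrelated)
```

The first two series are exact straight-line functions of `years`, which is the kind of too-perfect input that produces a correlation of 1.00 or -1.00.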

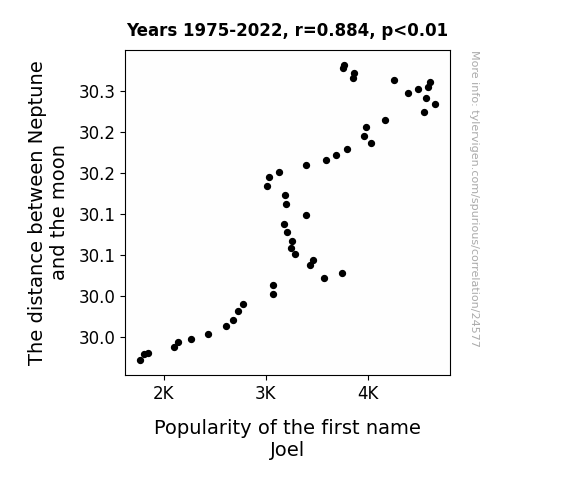

r² = 0.7815690 (Coefficient of determination)

This means 78.2% of the change in the one variable (i.e., Popularity of the first name Joel) is predictable based on the change in the other (i.e., The distance between Neptune and the moon) over the 48 years from 1975 through 2022.

p < 0.01, which is statistically significant (Null hypothesis significance test)

The p-value is 8.4E-17 (0.0000000000000000838395955438).

The p-value is a measure of how probable it is that we would randomly find a result this extreme. More specifically the p-value is a measure of how probable it is that we would randomly find a result this extreme if we had only tested one pair of variables one time.

But I am a p-villain. I absolutely did not test only one pair of variables one time. I correlated hundreds of millions of pairs of variables. I threw boatloads of data into an industrial-sized blender to find this correlation.

Who is going to stop me? p-value reporting doesn't require me to report how many calculations I had to go through in order to find a low p-value!
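A back-of-the-envelope sketch of why the number of calculations matters, under the simplifying assumption that every test is an independent comparison of pure noise (which is not really true of this database). The count of 636,906,169 calculations is the figure quoted under "Why this works"; the function name is hypothetical.

```python
# Figures quoted on this page
n_tests = 636_906_169  # correlation calculations reported under "Why this works"
alpha = 0.01           # the "statistically significant" cutoff

# Assumption: each test is independent noise, so each one has an
# alpha chance of landing below the cutoff purely by luck.
expected_flukes = n_tests * alpha

def chance_of_at_least_one_fluke(tests, cutoff):
    """Probability that at least one of `tests` independent null tests has p < cutoff."""
    return 1 - (1 - cutoff) ** tests

chance_100 = chance_of_at_least_one_fluke(100, alpha)

print(f"Expected 'significant' flukes: {expected_flukes:,.0f}")
print(f"Chance of at least one fluke in only 100 tests: {chance_100:.2f}")
```

Even 100 tests make a fluke more likely than not under these assumptions; hundreds of millions of tests make flukes a certainty.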

On average, you will find a correlation as strong as 0.88 in 8.4E-15% of random cases. Said differently, if you correlated 11,927,538,456,188,922 random variables with the same 47 degrees of freedom, you would randomly expect to find a correlation as strong as this one.

You don't actually need 11 quadrillion variables to find a correlation like this one. I don't have that many variables in my database. You can also correlate variables that are not independent. I do this a lot.

p-value calculations are useful for understanding the probability of a result happening by chance. They are most useful when used to highlight the risk of a fluke outcome. For example, if you calculate a p-value of 0.30, the risk that the result is a fluke is high. It is good to know that! But there are lots of ways to get a p-value of less than 0.01, as evidenced by this project.

In this particular case, the values are so extreme as to be meaningless. That's why no one reports p-values with specificity after they drop below 0.01.

Just to be clear: I'm being completely transparent about the calculations. There is no math trickery. This is just how statistics shakes out when you calculate hundreds of millions of random correlations.

Degrees of freedom is a measure of how many free components we are testing. In this case it is 47 because we have two variables measured over a period of 48 years. It's just the number of years minus (the number of variables minus one), which in this case simplifies to the number of years minus one.
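The "11 quadrillion" figure appears to be simply the reciprocal of the p-value, and the percentage is the p-value multiplied by 100. A quick check of that arithmetic, using the p-value printed on this page (the variable names are illustrative):

```python
# p-value as printed on this page
p_value = 0.0000000000000000838395955438

# Expressed as a percentage of random cases
as_percent = p_value * 100

# Number of random tries needed, on average, to see one result this extreme
tries_needed = 1 / p_value

print(f"{as_percent:.1e}% of random cases")
print(f"about {tries_needed:,.0f} tries")
```

Floating-point rounding means the last few digits will not match the page exactly, but the result lands at roughly 11.9 quadrillion.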

[ 0.8, 0.93 ] 95% correlation confidence interval (using the Fisher z-transformation)

The confidence interval is an estimate of the range of the value of the correlation coefficient, using the correlation itself as an input. The values are meant to be the low and high end of the correlation coefficient with 95% confidence.

This one is a bit more complicated than the other calculations, but I include it because many people have been pushing for confidence intervals instead of p-value calculations (for example: NEJM). However, if you are dredging data, you can reliably find yourself in the 5%. That's my goal!
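One common way to get such an interval is sketched below. It assumes the textbook Fisher z recipe: transform r with atanh, use a standard error of 1/sqrt(n - 3), then transform the bounds back with tanh. It takes r as the positive square root of the r² shown above. The function name is hypothetical, and the site may compute its interval differently in detail.

```python
import math
from statistics import NormalDist

def fisher_confidence_interval(r, n, confidence=0.95):
    """Approximate confidence interval for a Pearson r via the Fisher z-transformation."""
    z = math.atanh(r)                      # Fisher z-transform of r
    standard_error = 1 / math.sqrt(n - 3)  # textbook standard error of z
    # Two-sided critical value of the standard normal (about 1.96 for 95%)
    z_crit = NormalDist().inv_cdf(0.5 + confidence / 2)
    low = math.tanh(z - z_crit * standard_error)
    high = math.tanh(z + z_crit * standard_error)
    return low, high

# Values from this page: r² = 0.7815690 over 48 years.
# Assumption: r is positive, since both series decline over the period.
r = math.sqrt(0.7815690)
n_years = 48

low, high = fisher_confidence_interval(r, n_years)
print(f"[ {low:.2f}, {high:.2f} ]")
```

Rounded to two decimals, this reproduces the [ 0.8, 0.93 ] interval shown above.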

If I were being very sneaky, I could trim years from the beginning or end of the datasets to increase the correlation on some pairs of variables. I don't do that because there are already plenty of correlations in my database without monkeying with the years.

Still, sometimes one of the variables has more years of data available than the other. This page only shows the overlapping years. To see all the years, click on the "See what else correlates with..." link above.

All values for the years included above:

| Year | 1975 | 1976 | 1977 | 1978 | 1979 | 1980 | 1981 | 1982 | 1983 | 1984 | 1985 | 1986 | 1987 | 1988 | 1989 | 1990 | 1991 | 1992 | 1993 | 1994 | 1995 | 1996 | 1997 | 1998 | 1999 | 2000 | 2001 | 2002 | 2003 | 2004 | 2005 | 2006 | 2007 | 2008 | 2009 | 2010 | 2011 | 2012 | 2013 | 2014 | 2015 | 2016 | 2017 | 2018 | 2019 | 2020 | 2021 | 2022 |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| The distance between Neptune and the moon (Planetary distance (AU)) | 30.2819 | 30.2778 | 30.2715 | 30.2659 | 30.2628 | 30.2614 | 30.2554 | 30.2528 | 30.2475 | 30.2417 | 30.2341 | 30.2243 | 30.2147 | 30.2066 | 30.195 | 30.1871 | 30.1798 | 30.172 | 30.1664 | 30.1596 | 30.1517 | 30.1446 | 30.134 | 30.1231 | 30.1124 | 30.0986 | 30.088 | 30.0776 | 30.0667 | 30.0585 | 30.0516 | 30.0439 | 30.0382 | 30.0286 | 30.0215 | 30.0133 | 30.0021 | 29.9906 | 29.9823 | 29.9712 | 29.9634 | 29.9535 | 29.9482 | 29.9439 | 29.9383 | 29.9311 | 29.9291 | 29.9219 |
| Popularity of the first name Joel (Babies born) | 3772 | 3759 | 3870 | 3853 | 4255 | 4615 | 4588 | 4491 | 4396 | 4569 | 4663 | 4555 | 4171 | 3979 | 3964 | 4037 | 3798 | 3687 | 3587 | 3392 | 3125 | 3027 | 3008 | 3187 | 3201 | 3395 | 3182 | 3206 | 3256 | 3246 | 3285 | 3468 | 3430 | 3752 | 3567 | 3074 | 3069 | 2778 | 2725 | 2676 | 2606 | 2434 | 2266 | 2137 | 2098 | 1840 | 1800 | 1763 |

Why this works

- Data dredging: I have 25,237 variables in my database. I compare all these variables against each other to find ones that randomly match up. That's 636,906,169 correlation calculations! This is called “data dredging.” Instead of starting with a hypothesis and testing it, I instead abused the data to see what correlations shake out. It’s a dangerous way to go about analysis, because any sufficiently large dataset will yield strong correlations completely at random.

- Lack of causal connection: There is probably no direct connection between these variables, despite what the AI says above. This is exacerbated by the fact that I used "Years" as the base variable. Lots of things happen in a year that are not related to each other! Most studies would use something like "one person" instead of "one year" to be the "thing" studied.

  Because these pages are automatically generated, it's possible that the two variables you are viewing are in fact causally related. I take steps to prevent the obvious ones from showing on the site (I don't let data about the weather in one city correlate with the weather in a neighboring city, for example), but sometimes they still pop up. If they are related, cool! You found a loophole.

- Observations not independent: For many variables, sequential years are not independent of each other. If a population of people is continuously doing something every day, there is no reason to think they would suddenly change how they are doing that thing on January 1. A simple p-value calculation does not take this into account, so mathematically it appears less probable than it really is.

  Personally I don't find any p-value calculation to be 'simple,' but you know what I mean.

- Y-axis doesn't start at zero: I truncated the Y-axes of the graph above. I also used a line graph, which makes the visual connection stand out more than it deserves. Mathematically what I showed is true, but it is intentionally misleading. Below is the same chart but with both Y-axes starting at zero.

  Nothing against line graphs. They are great at telling a story when you have linear data! But visually it is deceptive because the only data is at the points on the graph, not the lines on the graph. In between each point, the data could have been doing anything. Like going for a random walk by itself!

- Outlandish outliers: There are "outliers" in this data. They stand out on the scatterplot above: notice the dots that are far away from any other dots. I intentionally mishandled outliers, which makes the correlation look extra strong.

  In concept, "outlier" just means "way different than the rest of your dataset." When calculating a correlation like this, they are particularly impactful because a single outlier can substantially increase your correlation.

  For the purposes of this project, I counted a point as an outlier if the residual was two standard deviations from the mean.

  (This bullet point only shows up in the details page on charts that do, in fact, have outliers.)
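The "observations not independent" point can be illustrated with a small simulation. This is a hypothetical sketch, not the procedure used to build this site. It compares pairs of unrelated white-noise series with pairs of unrelated random walks (where each year builds on the previous one), all 48 points long, and counts how often the correlation reaches 0.8 or more in absolute value. All function names and parameters are assumptions chosen for illustration.

```python
import math
import random

def pearson_r(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)

def white_noise(rng, n):
    """Each year is drawn independently of every other year."""
    return [rng.gauss(0, 1) for _ in range(n)]

def random_walk(rng, n):
    """Each year is last year's value plus an independent random step."""
    position, path = 0.0, []
    for _ in range(n):
        position += rng.gauss(0, 1)
        path.append(position)
    return path

def share_of_strong_correlations(make_series, rng, pairs=1000, n=48, threshold=0.8):
    """Fraction of unrelated series pairs whose |r| is at least `threshold`."""
    hits = 0
    for _ in range(pairs):
        r = pearson_r(make_series(rng, n), make_series(rng, n))
        if abs(r) >= threshold:
            hits += 1
    return hits / pairs

rng = random.Random(0)  # fixed seed so the run is repeatable
noise_share = share_of_strong_correlations(white_noise, rng)
walk_share = share_of_strong_correlations(random_walk, rng)

print(f"White noise pairs with |r| >= 0.8:  {noise_share:.1%}")
print(f"Random walk pairs with |r| >= 0.8:  {walk_share:.1%}")
```

With independent noise, a correlation that strong should essentially never appear; with random walks it should turn up regularly, even though no series has anything to do with any other.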

Try it yourself

You can calculate the values on this page on your own! Try running the Python code to see the calculation results.

Step 1: Download and install Python on your computer.

Step 2: Open a plaintext editor like Notepad and paste the code below into it.

Step 3: Save the file as "calculate_correlation.py" in a place you will remember, like your desktop. Copy the file location to your clipboard. On Windows, you can right-click the file and click "Properties," and then copy what comes after "Location:". As an example, on my computer the location is "C:\Users\tyler\Desktop".

Step 4: Open a command line window, for example by pressing Start, typing "cmd", and then pressing enter.

Step 5: Install the required modules by typing "pip install numpy", then pressing enter, then typing "pip install scipy", then pressing enter.

Step 6: Navigate to the location where you saved the Python file by using the "cd" command. For example, I would type "cd C:\Users\tyler\Desktop" and push enter.

Step 7: Run the Python script by typing "python calculate_correlation.py"

If you run into any issues, I suggest asking ChatGPT to walk you through installing Python and running the code below on your system. Try this question:

"Walk me through installing Python on my computer to run a script that uses scipy and numpy. Go step-by-step and ask me to confirm before moving on. Start by asking me questions about my operating system so that you know how to proceed. Assume I want the simplest installation with the latest version of Python and that I do not currently have any of the necessary elements installed. Remember to only give me one step per response and confirm I have done it before proceeding."

    # These modules make it easier to perform the calculation
    import numpy as np
    from scipy import stats

    # We'll define a function that we can call to return the correlation calculations
    def calculate_correlation(array1, array2):
        # Calculate Pearson correlation coefficient and p-value
        correlation, p_value = stats.pearsonr(array1, array2)

        # Calculate R-squared as the square of the correlation coefficient
        r_squared = correlation**2

        return correlation, r_squared, p_value

    # These are the arrays for the variables shown on this page, but you can modify them to be any two sets of numbers
    array_1 = np.array([30.2819,30.2778,30.2715,30.2659,30.2628,30.2614,30.2554,30.2528,30.2475,30.2417,30.2341,30.2243,30.2147,30.2066,30.195,30.1871,30.1798,30.172,30.1664,30.1596,30.1517,30.1446,30.134,30.1231,30.1124,30.0986,30.088,30.0776,30.0667,30.0585,30.0516,30.0439,30.0382,30.0286,30.0215,30.0133,30.0021,29.9906,29.9823,29.9712,29.9634,29.9535,29.9482,29.9439,29.9383,29.9311,29.9291,29.9219,])
    array_2 = np.array([3772,3759,3870,3853,4255,4615,4588,4491,4396,4569,4663,4555,4171,3979,3964,4037,3798,3687,3587,3392,3125,3027,3008,3187,3201,3395,3182,3206,3256,3246,3285,3468,3430,3752,3567,3074,3069,2778,2725,2676,2606,2434,2266,2137,2098,1840,1800,1763,])
    array_1_name = "The distance between Neptune and the moon"
    array_2_name = "Popularity of the first name Joel"

    # Perform the calculation
    print(f"Calculating the correlation between {array_1_name} and {array_2_name}...")
    correlation, r_squared, p_value = calculate_correlation(array_1, array_2)

    # Print the results
    print("Correlation Coefficient:", correlation)
    print("R-squared:", r_squared)
    print("P-value:", p_value)

Reusable content

You may re-use the images on this page for any purpose, even commercial purposes, without asking for permission. The only requirement is that you attribute Tyler Vigen. Attribution can take many different forms. If you leave the "tylervigen.com" link in the image, that satisfies it just fine. If you remove it and move it to a footnote, that's fine too. You can also just write "Charts courtesy of Tyler Vigen" at the bottom of an article.

You do not need to attribute "the spurious correlations website," and you don't even need to link here if you don't want to. I don't gain anything from pageviews. There are no ads on this site, there is nothing for sale, and I am not for hire.

For the record, I am just one person. Tyler Vigen, he/him/his. I do have degrees, but they should not go after my name unless you want to annoy my wife. If that is your goal, then go ahead and cite me as "Tyler Vigen, A.A. A.A.S. B.A. J.D." Otherwise it is just "Tyler Vigen."

When spoken, my last name is pronounced "vegan," like I don't eat meat.

Full license details.

For more on re-use permissions, or to get a signed release form, see tylervigen.com/permission.

Download images for these variables:

- High resolution line chart

  The image linked here is a Scalable Vector Graphic (SVG). It is the highest resolution that is possible to achieve. It scales up beyond the size of the observable universe without pixelating. You do not need to email me asking if I have a higher resolution image. I do not. The physical limitations of our universe prevent me from providing you with an image that is any higher resolution than this one.

  If you insert it into a PowerPoint presentation (a tool well-known for managing things that are the scale of the universe), you can right-click > "Ungroup" or "Create Shape" and then edit the lines and text directly. You can also change the colors this way.

  Alternatively you can use a tool like Inkscape.

- High resolution line chart, optimized for mobile

- Alternative high resolution line chart

- Scatterplot

- Portable line chart (png)

- Portable line chart (png), optimized for mobile

- Line chart for only The distance between Neptune and the moon

- Line chart for only Popularity of the first name Joel

Correlation ID: 24577 · Black Variable ID: 1961 · Red Variable ID: 2204